AI-Search, Demystified: A Manifesto for Marketers

This article breaks down how AI-native search works today, what behavioral shifts underpin it, and most importantly, how you can optimize for visibility across the major engines powering discovery in 2025.

In 2025, AI-powered search isn't just changing the game; it has revealed that we were playing the wrong sport entirely. Marketing teams, especially in sectors like health and wellness, can no longer optimize only for humans. We must now please algorithms that quote, summarize, and synthesize instead of merely ranking and linking.

The digital landscape has shifted from the familiar territory of SEO (which is not dead) to an entirely new continent where the battle is for sentence-level visibility inside large language models. If you're still chasing viral growth hacks or parsing third-party guru tips, you're essentially trying to navigate with a map from a world that no longer exists.

Where AI Search Stands in 2025

When I last outlined the trajectory of AI-driven search, these systems were still auxiliary creatures—experimental layers that augmented the familiar ranking engines like Google Search or Bing. Today, LLMs are the search engine. Google's Gemini, Microsoft Copilot, ChatGPT with Browse, Claude, and Perplexity aren't just interfaces—they're the new gatekeepers of human knowledge.

These digital librarians ingest billions of documents, videos, PDFs, and social posts, then return compressed, synthesized outputs that often deliver what users need before they even think to click. We've entered the era of the "zero-step answer"—where the journey from question to satisfaction happens entirely within the AI's response.

This metamorphosis didn't emerge overnight like some digital Big Bang. Instead, it unfolded through a series of quietly revolutionary developments:

Google began rolling out AI Overviews to all US users in May 2024, though they appear in only about 12.7% of search results—a selective deployment that signals both caution and ambition.

Microsoft fully integrated Copilot across Edge, Windows, and Microsoft 365, creating an AI-first ecosystem that feels less like a feature and more like a fundamental shift in how we interact with information.

OpenAI's partnership with Reddit and ongoing Wikipedia ingestion gave ChatGPT access to the raw, unfiltered conversations that shape human understanding—the digital equivalent of eavesdropping on the world's most diverse coffee shop.

Claude began accepting document uploads directly and citing exact lines using its Citations API, transforming it from a conversational AI into something closer to a research assistant with perfect memory.

The fundamental architecture has evolved: search engines no longer simply crawl and rank. They retrieve, distill, and remix. Your marketing content isn't just getting indexed; it's being ingested, synthesized, and often quoted without generating a single click to your carefully crafted landing pages.

Searchers Aren’t Browsing—They’re Asking

The behavioral mutation runs deeper than anyone initially predicted. Search has evolved from a retrieval system into something closer to an oracle—users arrive with complex, nuanced questions that would have been impossible to answer through traditional keyword matching.

Instead of typing "best probiotic for bloating," today's searcher asks: "What probiotics help with bloating during perimenopause, especially if I have IBS or SIBO, and I'm already taking magnesium supplements?"

This isn't just a longer query; it's a fundamentally different relationship with information. The question demands synthesis, domain expertise, and contextual understanding that can only emerge from analyzing thousands of sources simultaneously. LLMs were practically designed for exactly this kind of intellectual heavy lifting.

The journey from question to answer has collapsed into a single, atomic interaction. No more clicking through multiple sources, cross-referencing contradictory advice, or assembling fragments of information into a coherent picture. The AI does the synthesis work and presents a personalized answer that feels almost conversational.

These behavioral shifts are creating measurable tremors throughout the search ecosystem. According to recent research from SparkToro and Datos, we're witnessing unprecedented search volume growth—21.64% year-over-year—even as the nature of those searches fundamentally transforms. Conversational modifiers like "for me," "for my child," and "for this context" now dominate long-tail queries, suggesting users are training AI systems to understand their specific circumstances rather than seeking generic information.

Another fascinating development: the rise of verification behavior. Users increasingly ask "where did this come from?" inside AI chat interfaces, making citation styling and source transparency central to building trust. The question isn't just what information appears, but how it's attributed—turning source credibility into a competitive advantage.

Perhaps most intriguingly, bounce behavior has fundamentally shifted. Users increasingly skim AI-generated summaries and never visit the original source sites, creating a peculiar paradox where your website's user experience might matter less than how easily your content can be digested and regurgitated by machines.

How AI Search Engines Surface Content

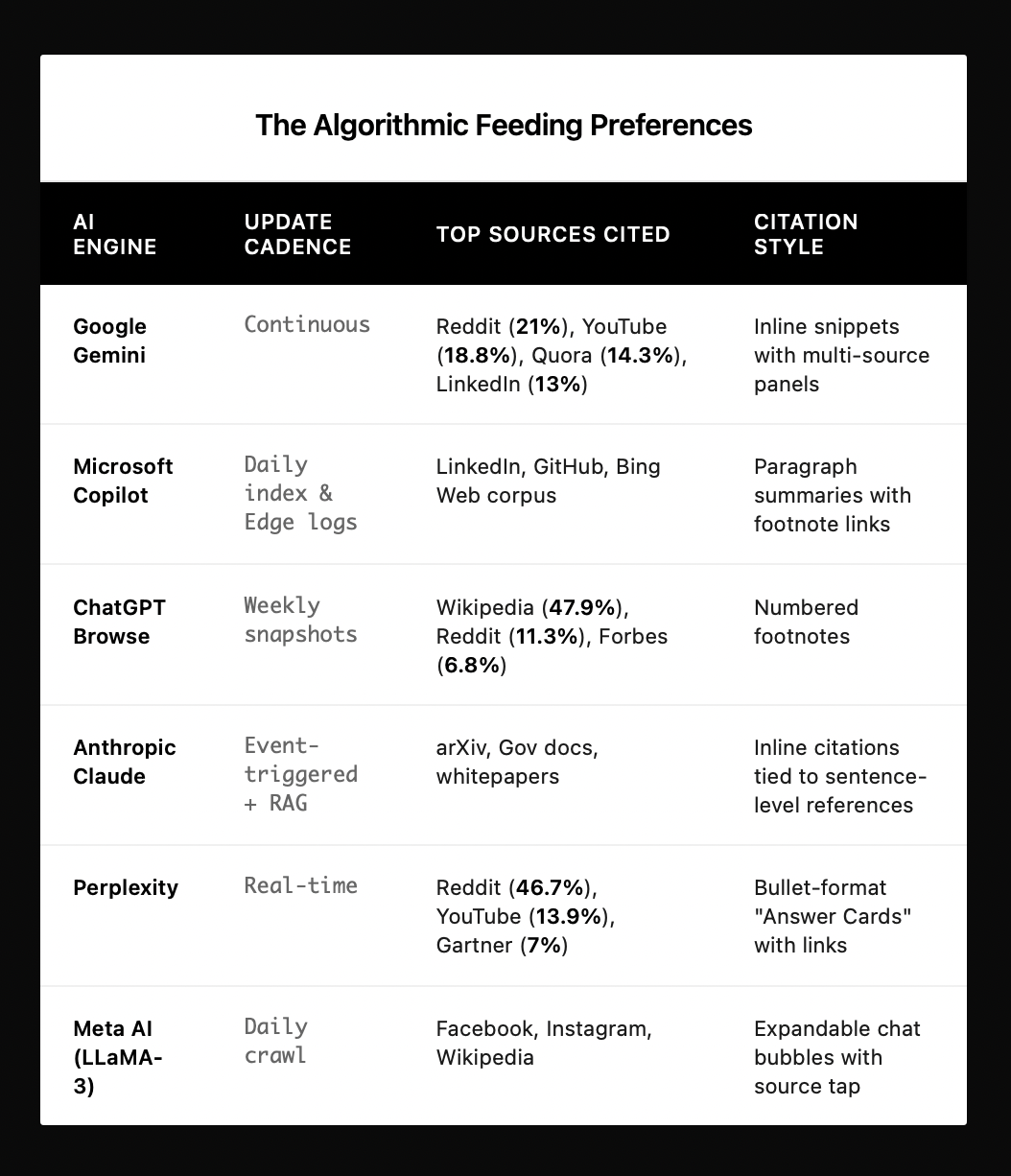

Each LLM-based engine has evolved its own peculiar appetite, developing distinct preferences for different types of content, sources, and citation styles. Understanding these algorithmic personalities has become essential for anyone hoping to remain visible in this new landscape.

The following analysis, based on Profound's research tracking 30 million AI citations, reveals the surprisingly diverse ecosystem of AI source preferences:

Each platform has developed not just different source preferences, but entirely different approaches to credibility, recency, and authority. For example, Gemini’s AI Overviews lean heavily into forums like Reddit and video transcripts from YouTube, while ChatGPT relies primarily on Wikipedia and high-authority journalistic outlets. Claude cites uploaded PDFs directly, giving an advantage to original research and in-depth white papers.

Synthesis-First Content Earns Visibility

To earn visibility in this new ecosystem, content must be architecturally designed for synthesis rather than human consumption alone. This represents a fundamental shift from SEO's traditional focus on user intent to something more nuanced: algorithmic digestibility.

The winning formula starts with what I call the "synthesis opener"—a clear, standalone definition or answer within the first two sentences that an LLM can extract and quote without requiring additional context. Think of it as writing the AI's summary for it, but making it so compelling that humans want to read further.

Supporting evidence becomes crucial in ways traditional SEO never demanded. First-party survey data, original research, and proprietary benchmarks don't just add authority—they create citeable moments that competing content simply can't replicate. Machines, it turns out, love exclusive data as much as human journalists do.

Freshness operates on an entirely different timeline in AI search. These systems deprioritize stale sources with algorithmic ruthlessness that makes Google's freshness algorithm look forgiving. Pages should be updated at least quarterly, with timestamped meta tags and schema updates that explicitly signal recency to crawling algorithms.
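To make a quarterly refresh rule auditable, each page needs a machine-readable modification date, such as a schema.org `dateModified` value. The minimal Python sketch below assumes you have already collected those dates into a URL-to-date mapping (the URLs are hypothetical) and treats "at least quarterly" as a 90-day maximum age; it lists overdue pages, oldest first.

```python
from datetime import date

# Assumption: "at least quarterly" is modeled as a 90-day maximum age.
MAX_AGE_DAYS = 90


def days_since_update(date_modified: str, today: date) -> int:
    """Days elapsed since a date-only ISO 8601 string (YYYY-MM-DD), e.g. a dateModified value."""
    return (today - date.fromisoformat(date_modified)).days


def stale_pages(pages: dict[str, str], today: date, max_age_days: int = MAX_AGE_DAYS) -> list[str]:
    """Return URLs whose dateModified is older than max_age_days, oldest first."""
    ages = {url: days_since_update(modified, today) for url, modified in pages.items()}
    overdue = [url for url, age in ages.items() if age > max_age_days]
    return sorted(overdue, key=lambda url: ages[url], reverse=True)


if __name__ == "__main__":
    # Hypothetical inventory: URL -> dateModified collected from each page's JSON-LD.
    inventory = {
        "https://example.com/probiotics-bloating": "2025-01-10",
        "https://example.com/perimenopause-guide": "2025-05-02",
        "https://example.com/magnesium-faq": "2024-11-20",
    }
    for url in stale_pages(inventory, today=date(2025, 6, 1)):
        print("refresh:", url)
```

Sorting by age puts the most neglected pages at the top of the refresh queue.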

The structural requirements go deeper than traditional on-page optimization. Schema.org markup types, specifically FAQPage, HowTo, Product, and ClaimReview, provide machine-readable logic that can give your content an advantage in AI selection algorithms. Pages without these enhancements are like books without indexes in a world where librarians have become speed-readers.
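As an illustration of what this markup looks like, here is a small Python helper that emits schema.org `FAQPage` JSON-LD. It is a sketch, not a complete implementation: `faq_page_jsonld` and `script_tag` are hypothetical helper names, and the Q&A text is placeholder content. Because `dateModified` is a CreativeWork property that FAQPage inherits, the same block can also carry the freshness signal.

```python
import json


def faq_page_jsonld(qa_pairs: list[tuple[str, str]], date_modified: str) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        # dateModified is inherited from CreativeWork, so it is valid on FAQPage.
        "dateModified": date_modified,
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script element that is embedded in the page HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    # Hypothetical Q&A content for illustration only.
    pairs = [
        (
            "Which probiotic strains are studied for bloating?",
            "Placeholder answer: summarize the evidence in two or three plain sentences.",
        ),
    ]
    print(script_tag(faq_page_jsonld(pairs, "2025-06-01")))
```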

Platform-by-Platform Optimization Tactics

Each AI search engine has its unique mechanisms for ingesting and surfacing content. Understanding these differences is crucial for optimizing content visibility.

Google Gemini / AI Overviews

Update Cadence: Continuous crawl with real-time data integration

Top Citation Sources: Reddit, YouTube, Quora, LinkedIn

Citation Style: Inline links under multi-snippet panels

Despite Gemini's continuous crawling and real-time integration, it shows a fascinating preference for community-generated content over traditional authority sites. Reddit accounts for 21% of its top citations, while YouTube transcripts capture 18.8%, which suggests that conversational, authentic content often outperforms polished corporate messaging.

To optimize for Gemini:

Begin pages with concise, standalone definitions—imagine writing the perfect elevator pitch that could survive being shouted across a crowded room and still make sense.

Implement structured data using Schema.org markup (FAQPage, HowTo, ClaimReview): think of these as secret handshakes that help algorithms recognize your content as the real deal rather than digital noise.

Regularly update content to maintain freshness—Gemini's appetite for recency is more ruthless than a food critic at a week-old sushi bar.

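Ensure robots.txt allows Googlebot, which crawls for Google Search and its AI Overviews, and the Google-Extended token if you want your content available to Gemini apps, because even the most brilliant content is invisible if you've accidentally locked the door.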
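Checking the door is easy to automate. The sketch below uses Python's standard-library `urllib.robotparser` to test a robots.txt file against two Google tokens: `Googlebot`, which crawls for Search (and therefore AI Overviews), and `Google-Extended`, which Google documents as the control for Gemini apps and Vertex AI. The sample robots.txt, the URL, and the `crawl_access` name are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Googlebot crawls for Google Search, which includes AI Overviews.
# Google-Extended is the separate token Google documents for Gemini apps and Vertex AI.
GOOGLE_AGENTS = ("Googlebot", "Google-Extended")


def crawl_access(robots_txt: str, url: str, agents: tuple[str, ...] = GOOGLE_AGENTS) -> dict[str, bool]:
    """Report whether each user-agent token may fetch `url` under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Caveat: Python applies the first matching Allow/Disallow rule, while Google uses
    # the most specific match, so overlapping rules can be judged differently.
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    # Hypothetical robots.txt that blocks Google-Extended but leaves Googlebot open.
    robots = "User-agent: Google-Extended\nDisallow: /\n\nUser-agent: *\nDisallow: /private/\n"
    print(crawl_access(robots, "https://example.com/guides/probiotics"))
    # prints {'Googlebot': True, 'Google-Extended': False}
```

In this example a site would stay eligible for AI Overviews while opting out of Gemini apps, which is exactly the kind of accidental lock the tip warns about if that was not the intent.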

Microsoft Copilot (Bing Chat, Edge Sidebar)

Update Cadence: Daily index pulls and integration with Edge logs.

Top Citation Sources: Bing web corpus, with emphasis on LinkedIn and GitHub.

Citation Style: Paragraph answers with footnotes labeled "Learn more."

Copilot's integration with professional platforms like LinkedIn and GitHub creates an unexpected advantage for B2B content. The research from Profound shows it heavily favors workplace-oriented sources, making professional thought leadership more valuable than traditional consumer content.

To enhance visibility:

Incorporate structured elements like tables and step lists—Copilot devours structured information like a spreadsheet-obsessed analyst discovering patterns in chaos.

Add HTML <table> elements for specifications and <ol> for procedural content—these aren't just formatting choices, they're architectural decisions that help Copilot understand the logical bones of your expertise.

Ensure canonical tags are correctly implemented—because duplicate content confuses AI systems like multiple GPS signals confuse a lost driver, leading to algorithmic paralysis rather than citation glory.
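The three Copilot checks above can be run as a quick page audit. This sketch uses Python's standard-library `html.parser` to count `<table>` and `<ol>` elements and to confirm that a page declares exactly one `rel="canonical"` URL. The `StructureAudit` class, the `audit` function, and its report keys are hypothetical names; a production audit would also fetch live pages and follow redirects.

```python
from html.parser import HTMLParser


class StructureAudit(HTMLParser):
    """Counts <table> and <ol> elements and collects rel="canonical" link targets."""

    def __init__(self) -> None:
        super().__init__()
        self.tables = 0
        self.ordered_lists = 0
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; attribute values may be None.
        attributes = dict(attrs)
        if tag == "table":
            self.tables += 1
        elif tag == "ol":
            self.ordered_lists += 1
        elif tag == "link":
            # rel may hold several space-separated tokens.
            rel_tokens = (attributes.get("rel") or "").lower().split()
            href = attributes.get("href")
            if "canonical" in rel_tokens and href:
                self.canonicals.append(href)


def audit(html: str) -> dict:
    """Summarize table, ordered-list, and canonical-tag usage for one HTML document."""
    parser = StructureAudit()
    parser.feed(html)
    parser.close()
    return {
        "tables": parser.tables,
        "ordered_lists": parser.ordered_lists,
        "canonicals": parser.canonicals,
        # Exactly one canonical URL is the healthy case; zero or several needs a fix.
        "canonical_ok": len(parser.canonicals) == 1,
    }
```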

OpenAI ChatGPT (Browse Mode)

Update Cadence: Weekly snapshots.

Top Citation Sources: Wikipedia, Reddit, Forbes, G2.

Citation Style: Numbered footnotes.

ChatGPT's overwhelming reliance on Wikipedia reveals a conservative approach to source credibility that prioritizes consensus over recency. This creates both opportunities and challenges for brands seeking visibility.

To optimize content:

Maintain accurate and well-sourced Wikipedia entries for your brand and products — Wikipedia isn't just an encyclopedia; for ChatGPT, it's the ultimate truth oracle, making your Wikipedia presence more valuable than a prime real estate listing.

Address citation gaps promptly—missing or outdated Wikipedia citations are like having a broken telephone number on your business card when your biggest customer is trying to call.

Align public information with internal data to ensure consistency — contradictions between your website and Wikipedia create algorithmic cognitive dissonance that ChatGPT resolves by simply ignoring you entirely.

Anthropic - Claude.ai

Update Cadence: Event-triggered Retrieval-Augmented Generation (RAG); accepts PDF uploads

Top Citation Sources: Government PDFs, arXiv papers, newswires

Citation Style: Bracketed inline URLs keyed to document passages

Claude's unique RAG approach and PDF upload capabilities create an unexpected opportunity for brands willing to invest in substantial, document-length content. Its preference for government PDFs, arXiv papers, and detailed whitepapers suggests it values depth over immediacy.

To optimize for Claude:

Host authoritative, document-length assets like research reports and policy whitepapers — Claude treats comprehensive documents like rare manuscripts in a digital library, favoring depth over the bite-sized content that satisfies other algorithms.

Ensure documents are in crawlable directories with plain-text abstracts — think of abstracts as the trailer that convinces Claude your full document is worth the computational effort to digest and remember.

Submit documents to platforms where Claude is active — because even the most brilliant research report is worthless if it's buried in a digital cave where no algorithm will ever stumble upon it.
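One conventional way to keep document-length assets and their abstracts discoverable is to list both in an XML sitemap with `lastmod` dates, following the sitemaps.org protocol. The sketch below builds a minimal sitemap with Python's `xml.etree.ElementTree`; the URLs and the `build_sitemap` name are hypothetical, and whether a given AI crawler consults sitemaps is an assumption rather than something this article establishes.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(documents: list[tuple[str, str]]) -> str:
    """Return sitemap XML with one <url> per (url, lastmod) pair; lastmod as YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in documents:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical whitepaper PDF plus the HTML page carrying its plain-text abstract.
    print(build_sitemap([
        ("https://example.com/research/gut-health-survey-2025.pdf", "2025-05-20"),
        ("https://example.com/research/gut-health-survey-2025/", "2025-05-20"),
    ]))
```

Listing the abstract page next to the PDF gives crawlers a lightweight entry point to the full document.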

Perplexity

Update Cadence: Near real-time

Top Citation Sources: Reddit, YouTube, Gartner

Citation Style: "Answer card" with bullet citations

Perplexity's Reddit obsession and real-time updating make it the most community-driven of the major AI search engines. Its citations from YouTube and review platforms suggest it prioritizes authentic, user-generated perspectives.

To optimize for Perplexity:

Engage actively on relevant Reddit subreddits — but authentically, like joining a neighborhood conversation rather than crashing a party with business cards and a megaphone.

Produce transcript-rich YouTube videos. Both long-form content and YouTube Shorts work beautifully, because Perplexity treats video transcripts like searchable conversations that happen to have moving pictures attached.

Ensure content is valuable and not overtly promotional. Perplexity's real-time nature means it can smell marketing desperation from across the internet, preferring genuine expertise over polished corporate speak.

Meta AI (Llama 3)

Update Cadence: Daily corpus refresh

Top Citation Sources: Public Facebook and Instagram posts, captions, and Wikipedia

To optimize for Meta:

Write Instagram captions as mini-FAQs: state the question the image answers, then supply the answer in 50 words or fewer, turning your visual content into digestible knowledge packets that Meta AI can quote like tiny wisdom bombs.

Add descriptive alt-text to images, naming the concept clearly because Meta AI reads images through text descriptions like a digital archaeologist reconstructing artifacts from inventory notes.
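If your team drafts captions in bulk, the mini-FAQ rule can be checked before posting. This sketch assumes a hypothetical house format in which the first line is the question and everything after it is the answer, and it reads the 50-word figure as an upper limit; `check_caption` is an illustrative name.

```python
def check_caption(caption: str, max_answer_words: int = 50) -> list[str]:
    """Flag captions that break a question-then-answer mini-FAQ pattern.

    Assumed format: first line is the question; the remaining lines are the answer.
    """
    question, _, answer = caption.strip().partition("\n")
    problems: list[str] = []
    if not question.strip().endswith("?"):
        problems.append("first line should be the question the image answers")
    word_count = len(answer.split())
    if word_count == 0:
        problems.append("missing answer after the question")
    elif word_count > max_answer_words:
        problems.append(f"answer is {word_count} words; aim for {max_answer_words} or fewer")
    return problems
```

An empty list means the caption fits the pattern; anything else is a note for the editor.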

Creating a Measurement Framework When Clicks Disappear

The old metrics tell beautiful lies about ugly truths. Traditional SEO lived and died by impressions, clicks, and sessions—numbers that made sense when search was about navigation rather than answers. But in a zero-click ecosystem where nearly 60% of searches end without any clicks, those familiar KPIs become archaeological artifacts from a simpler time.

In this new landscape, you're measuring influence without visits, recall without UTMs, and conversion paths that start with a sentence, not a session. Your content might be paraphrased invisibly, quoted without attribution, or referenced in AI-generated summaries that lead to assisted conversions days or weeks later.

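Here's the measurement reality that matters: four metrics take over from clicks as your core KPIs, namely citation share by platform, zero-click impressions, answer velocity, and assisted conversions from zero-click exposure.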

Let’s go deeper into each and how to use them to justify budgets, track performance, and optimize for relevance—not just traffic.

Citation Share by Platform

This is your share of voice across the new AI surface area. It answers the question: How often is my content being quoted by Gemini, Copilot, Claude, or ChatGPT compared to competitors on the same topic?

Tools like Profound and SparkSense track domain-level appearances in AI outputs. Some enterprises run custom LLM prompt scrapers that simulate user questions and analyze returned citations.

What to do with this metric:

Benchmark monthly for your key revenue-driving topics. If your domain is showing up in <5% of answer outputs compared to competitors, it's time to act.

Refresh page content: tighten answer blocks, add structured data, or improve source freshness.

Prioritize PR and citation-worthy assets (original research, clear definitions, etc.).

If you can't access a third-party tool, you can simulate this manually with a prompt battery in each major LLM and track who gets cited. It's labor-intensive but will surface both visibility gaps and new content opportunities — like digital archaeology revealing which competitors have quietly colonized your topic territory.
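For the manual prompt-battery route, the bookkeeping is simple once you record which URLs each engine cited. The sketch below assumes a hypothetical record format (`platform`, `prompt`, `cited_urls`) and defines citation share as the fraction of responses on a platform that cite your domain at least once; it reads the "<5%" benchmark above as a 5% threshold on that fraction. Running `citation_share` once for your domain and once per competitor on the same prompts gives the side-by-side comparison.

```python
from collections import defaultdict
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Hostname without a leading 'www.', e.g. https://www.example.com/a -> example.com."""
    return (urlparse(url).hostname or "").removeprefix("www.")


def citation_share(results: list[dict], target: str) -> dict[str, float]:
    """Fraction of responses on each platform that cite `target` at least once.

    Each result is a hypothetical record:
    {"platform": "Perplexity", "prompt": "...", "cited_urls": ["https://...", ...]}
    """
    responses: dict[str, int] = defaultdict(int)
    citing: dict[str, int] = defaultdict(int)
    for result in results:
        platform = result["platform"]
        responses[platform] += 1
        if any(domain_of(url) == target for url in result["cited_urls"]):
            citing[platform] += 1
    return {platform: citing[platform] / n for platform, n in responses.items()}


def below_threshold(shares: dict[str, float], threshold: float = 0.05) -> list[str]:
    """Platforms where the share falls under the threshold (5% by default)."""
    return sorted(platform for platform, share in shares.items() if share < threshold)
```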

Zero-Click Impressions

Derived from Google Search Console (GSC) performance data, this metric captures pages that earn impressions without clicks. Because GSC folds AI Overview appearances into its regular web search data, rising zero-click impressions are a signal, not proof, that Gemini is serving your content in AI Overviews without driving sessions, either because the LLM is quoting you without attribution or because the answer satisfies the user instantly.

How to track:

In GSC, export query-level data.

Filter where impressions > 0 and clicks = 0.

Group by URL or page topic and look for rising impression curves with flat sessions.
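The filter-and-group steps look like this in Python. The Performance report export in the GSC interface lists queries and pages in separate sheets, so rows carrying both a query and a page usually come from the Search Console API or the BigQuery bulk export; the column names below (`query`, `page`, `clicks`, `impressions`) are assumptions. The sketch covers the filter and grouping only; spotting rising impression curves still means comparing date ranges.

```python
import csv
import io
from collections import defaultdict


def zero_click_pages(csv_text: str) -> list[tuple[str, int]]:
    """Total impressions from zero-click query rows, grouped by page, largest first.

    Assumes hypothetical columns: query, page, clicks, impressions.
    """
    impressions_by_page: dict[str, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        clicks = int(row["clicks"])
        impressions = int(row["impressions"])
        # Keep only rows with impressions > 0 and clicks = 0.
        if impressions > 0 and clicks == 0:
            impressions_by_page[row["page"]] += impressions
    return sorted(impressions_by_page.items(), key=lambda item: item[1], reverse=True)
```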

Use cases:

Flag content that is informationally rich but not quote-worthy — restructure to include crisp answer-first summaries.

Validate that your pages are feeding the machine, even if they're not bringing traffic.

Pair with brand search volume to detect latent awareness lift from AI citations.

This is also where on-page entity linking and strong branding become important. If users read about you in an Overview but don't click, they might return days later through direct or branded queries. This lifts recall even if your session count stays flat—like planting seeds in algorithmic consciousness that bloom weeks later in completely different contexts.

Answer Velocity

Answer Velocity is how quickly your page is picked up and quoted—or dropped—by LLMs after content changes. Unlike classic SEO, which may take weeks to reflect in SERPs, LLM visibility often shifts within hours or days of a refresh.

Ways to monitor:

Log your most quoted answers inside engines like Gemini, Perplexity, or Claude.

Track whether those summaries are updated after you modify your content.

Tools like Profound can track quote timestamps and decay rates.

If a quote hasn't changed in 30–45 days and your competitors are gaining share, it's likely time to:

Update your statistics or product claims

Change schema (e.g., from FAQ to How-To, if a more task-based query is winning)

Issue a new structured block or embed a recent citation-worthy insight
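The 30-day rule above can be expressed as a small check. This sketch assumes a hypothetical log of (observation date, quoted text) pairs for one prompt on one engine, plus a list of a competitor's citation-share readings in time order; it flags a refresh when your quote has not changed for 30 days or more and the competitor's latest share exceeds its first. Function names are illustrative.

```python
from datetime import date


def last_quote_change(log: list[tuple[date, str]]) -> date:
    """Date the quoted text last differed from the previous observation (or was first seen)."""
    ordered = sorted(log)
    changed_on = ordered[0][0]
    for (_, previous), (day, text) in zip(ordered, ordered[1:]):
        if text != previous:
            changed_on = day
    return changed_on


def refresh_due(log: list[tuple[date, str]], competitor_shares: list[float],
                today: date, stale_after_days: int = 30) -> bool:
    """True when the quote is at least `stale_after_days` old and the competitor is gaining."""
    quote_age = (today - last_quote_change(log)).days
    competitor_gaining = len(competitor_shares) >= 2 and competitor_shares[-1] > competitor_shares[0]
    return quote_age >= stale_after_days and competitor_gaining
```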

Claude's and ChatGPT's retrieval layers tend to favor recent, updated references, while Perplexity reflects changes almost in real time due to its live indexing. Understanding these different metabolisms helps you time your content refreshes like a master chef coordinating multiple dishes: each algorithm has its own optimal serving temperature.

Assisted Conversions from Zero-Click Exposure

Zero-click impressions still drive revenue—but often not in linear or trackable ways. Users may see your brand mentioned in an AI response and return later via direct, email, or brand search. These assisted conversions are where traditional attribution fails.

To fix that:

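In GA4, extend the key event attribution lookback window (up to 90 days for most key events) so that delayed conversions still receive credit.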

Use the "Conversion Paths" and "Model Comparison" reports to examine first-touch interactions, such as organic, direct, or branded entries, that may have followed zero-click exposure; GA4 never sees the zero-click query itself.

Compare branded search or direct channel lifts after major content pushes or AI visibility spikes.

For more precise analysis:

Use Triple Whale, SegMetrics, or Northbeam to track cohorts over time.

Measure if branded search queries increase after you launch new data-rich content (e.g., a menopause supplement guide or hormone therapy PDF that gets surfaced in ChatGPT or Claude).
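A before-and-after comparison is the simplest version of this branded-search check. The sketch below compares mean daily branded searches in equal windows on either side of a launch date; the 28-day window (four full weeks, so weekday patterns cancel out) and the `branded_lift` name are assumptions, and a raw lift like this does not control for seasonality or concurrent campaigns.

```python
from datetime import date, timedelta
from statistics import mean


def branded_lift(daily: dict[date, int], launch: date, window_days: int = 28) -> float:
    """Percent change in mean daily branded searches after vs. before `launch`.

    The pre-launch window is the `window_days` days before launch; the post-launch
    window starts on the launch day itself.
    """
    before = [n for day, n in daily.items() if 0 < (launch - day).days <= window_days]
    after = [n for day, n in daily.items() if 0 <= (day - launch).days < window_days]
    if not before or not after or mean(before) == 0:
        raise ValueError("need post-launch data and non-zero pre-launch data")
    return (mean(after) - mean(before)) / mean(before) * 100


if __name__ == "__main__":
    # Hypothetical daily counts of branded queries around a guide launch.
    launch_day = date(2025, 3, 1)
    counts = {launch_day - timedelta(days=i): 100 for i in range(1, 29)}
    counts.update({launch_day + timedelta(days=i): 120 for i in range(28)})
    print(f"branded search lift: {branded_lift(counts, launch_day):.1f}%")
    # prints "branded search lift: 20.0%"
```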

These assisted paths become critical in proving ROI from LLM optimization—even if you don't see last-click revenue in your dashboards. It's like tracking the influence of a great conversation at a dinner party: the immediate impact might be invisible, but the long-term relationship shifts can reshape entire business trajectories.

Don’t Just Publish…Train the Machine

Relevance has replaced rank as the ultimate currency. In the era of AI-native search, visibility is won at the sentence level, not the page level. Your content must speak clearly to machines while remaining compelling to humans — a dual audience that demands both precision and personality.

Feed the machines. Write to be quoted. And understand that in 2025, publishing is not about traffic — it's about trust and training data that positions your voice in the algorithmic conversations that increasingly shape human understanding.